Gradient descent/ascent is a great asset. If you have an approximation model with parameters $\theta$ and a cost function $\mathcal{J}(\theta)$, then you can update the parameters $\theta$ with the gradient of $\mathcal{J}$ with respect to $\theta$. In value approximation methods we try to approximate $V(s)$ or $Q(s,a)$ and we use a policy like $\epsilon$-greedy for control. The problem with $\epsilon$-greedy is that we have to take a ‘max’ over all possible actions, which is really time-consuming for environments with a continuous action space. Also, sometimes we need a stochastic policy, like a Gaussian policy, which mostly chooses actions around its mean but still ensures randomness in the selection of an action. In such cases, it’s better to learn a policy for the particular environment that maximizes reward, and to do so we need gradients with respect to our policy parameters. In this post we will look into different aspects of policy gradients and derive the necessary proofs.

Let’s choose a policy $\pi_\theta(a,s)$ with parameters $\theta$. The gradients $\nabla_\theta \mathcal{J}(\theta)$ depend on the choice of objective function, and formulating an objective function depends on the type of environment we are in. For an episodic environment:

$$\mathcal{J}(\theta) = V_{\pi_\theta}(s_0)$$

which basically translates to the expected reward from our starting state.

For a continuous, i.e. never-ending, environment we use a stationary distribution, $d_{\pi_\theta}(s)$, which tells us the distribution of states after the process has run for a sufficiently long time, such that running the process any longer doesn’t change the distribution. In such environments we average the immediate rewards over the entire distribution of states that we visit.

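$$\mathcal{J}(\theta) = \sum_{s \in \mathcal{S}} d_{\pi_\theta}(s) \sum_{a \in \mathcal{A}} \pi_\theta(a,s) \mathcal{R}(s,a)$$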
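As a rough illustration of the average-reward objective, the sketch below finds a stationary distribution by power iteration and averages the per-state rewards under it. The two-state chain, the matrix `P` (state-to-state transition probabilities once the policy is fixed) and the vector `r` (expected immediate reward in each state under that policy) are made-up values for illustration only.

```python
def stationary_distribution(P, iters=1000):
    """Power iteration: apply d <- d P repeatedly until d stops changing."""
    n = len(P)
    d = [1.0 / n] * n  # start from a uniform distribution over states
    for _ in range(iters):
        d = [sum(d[i] * P[i][j] for i in range(n)) for j in range(n)]
    return d


def average_reward(P, r, iters=1000):
    """Average immediate reward under the stationary distribution."""
    d = stationary_distribution(P, iters)
    return sum(d_s * r_s for d_s, r_s in zip(d, r))


# Hypothetical chain induced by some fixed policy (assumed values).
P = [[0.9, 0.1],
     [0.5, 0.5]]
r = [1.0, 0.0]

d = stationary_distribution(P)
J = average_reward(P, r)
print(d, J)
```

Running more iterations does not change `d` once it has converged, which is exactly the property that makes the distribution stationary.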

Let’s find policy gradients for both environments:

Episodic Environments:

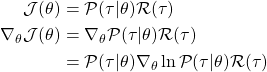

Let’s denote the probability of a trajectory $\tau$ as $\mathcal{P}(\tau \vert \theta)$ and the reward for the episode as $\mathcal{R}(\tau)$. Put simply, an episode contributes the probability of its trajectory times its total reward to the objective.

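$\nabla_\theta \mathcal{P}(\tau \vert \theta)$ can be rewritten as $\mathcal{P}(\tau \vert \theta) \nabla_\theta \ln \mathcal{P}(\tau \vert \theta)$ since

(1) $\nabla_\theta \ln z = \frac{1}{z} \nabla_\theta z$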
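The log-derivative identity, $\nabla_\theta f = f \, \nabla_\theta \ln f$ for a positive function $f$, can be sanity-checked numerically. The sketch below uses finite differences on a sigmoid; the function `f` and the point `theta` are arbitrary choices made only for this check.

```python
import math


def f(theta):
    # Hypothetical positive function of a scalar parameter (a sigmoid).
    return 1.0 / (1.0 + math.exp(-theta))


def numerical_grad(g, theta, eps=1e-6):
    """Central finite-difference approximation of dg/dtheta."""
    return (g(theta + eps) - g(theta - eps)) / (2 * eps)


theta = 0.3
lhs = numerical_grad(f, theta)                                     # grad of f
rhs = f(theta) * numerical_grad(lambda t: math.log(f(t)), theta)   # f * grad of ln f
print(lhs, rhs)
```

Both numbers should agree up to finite-difference error, which is why the gradient of a probability can be traded for the probability times the gradient of its logarithm.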

The probability of a trajectory $\tau$ can be defined as:

$$\mathcal{P}(\tau \vert \theta) = p(s_0) \prod_{t}^{T} \pi_\theta(a_t,s_t) \, p(s_{t+1} \vert s_t, a_t)$$

where $p(s_0)$ is the probability of the first state and $p(s_{t+1} \vert s_t, a_t)$ is the transition probability among states as governed by the environment. So,

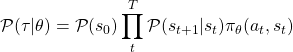

So far we formulated this for one episode. If we have D trajectories, this turns into an expectation, which we can estimate with the sample mean.

(2) ![Rendered by QuickLaTeX.com \begin{align*}\nabla_\theta \mathcal{J}(\theta) &= \sum_{\tau}^{D}\mathcal{P}(\tau \vert \theta)\sum_{t}^{T}\nabla_\theta \ln \pi_\theta(a_t,s_t) \mathcal{R}(\tau) \nonumber \\&= \mathbb{E}_{\tau \sim \pi_\theta} [\sum_{t}^{T}\nabla_\theta \ln \pi_\theta(a_t,s_t) \mathcal{R}(\tau)] \\\end{align*}](https://akashe.io/wp-content/ql-cache/quicklatex.com-96a525b386bbf6862a50f4cbb044a0e7_l3.png)
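The sample-mean estimate of this expectation can be sketched as follows. This is a minimal toy, not a full implementation: the policy is a softmax over two actions that ignores the state, the reward (+1 each time action 1 is chosen) is invented for the example, and the names `reinforce_gradient` and `sample_trajectory` are hypothetical.

```python
import math
import random


def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]


def grad_log_pi(theta, action):
    """Score of a state-independent softmax policy: one_hot(action) - pi."""
    pi = softmax(theta)
    return [(1.0 if i == action else 0.0) - pi[i] for i in range(len(theta))]


def reinforce_gradient(theta, trajectories):
    """Average over trajectories of sum_t grad ln pi(a_t) * R(tau)."""
    grad = [0.0] * len(theta)
    for actions, total_reward in trajectories:
        for a in actions:
            g = grad_log_pi(theta, a)
            for i in range(len(theta)):
                grad[i] += g[i] * total_reward
    return [g / len(trajectories) for g in grad]


def sample_trajectory(theta, rng, horizon=3):
    """Assumed toy environment: reward +1 every time action 1 is taken."""
    pi = softmax(theta)
    actions = [rng.choices(range(len(theta)), weights=pi)[0] for _ in range(horizon)]
    total_reward = float(sum(1 for a in actions if a == 1))
    return actions, total_reward


rng = random.Random(0)
theta = [0.0, 0.0]
for _ in range(200):
    batch = [sample_trajectory(theta, rng) for _ in range(20)]
    grad = reinforce_gradient(theta, batch)
    theta = [t + 0.1 * g for t, g in zip(theta, grad)]  # gradient ascent step

print(softmax(theta))
```

After training, the policy should put most of its probability on action 1, since that is the only action the toy reward favours.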

Continuous Environments:

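Using the average reward per time step as our cost function,

$$\mathcal{J}(\theta) = \sum_{s \in \mathcal{S}} d_{\pi_\theta}(s) \sum_{a \in \mathcal{A}} \pi_\theta(a,s) \mathcal{R}(s,a)$$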

Finding gradients for continuous environments is tricky. Changes in the state distribution are a function of our policy as well as the environment. Since we don’t know how the environment works, we don’t know how our parameters $\theta$ affect $d_{\pi_\theta}(s)$.

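This is where the policy gradient theorem comes in, which says:

$$\nabla_\theta \mathcal{J}(\theta) \propto \sum_{s \in \mathcal{S}} \mu(s) \sum_{a \in \mathcal{A}} \nabla_\theta \pi_\theta(a,s) q_\pi(s, a)$$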

where $\mu(s)$ is a distribution wherein $\mu(s) = \frac{\eta(s)}{\sum_{s'} \eta(s')}$, with $\eta(s)$ the number of visits to state $s$, so it is the number of visits to that state divided by the total visits to all states.

Also, the proportionality constant is the average episode length in an episodic environment and 1 in a continuous environment.

![Rendered by QuickLaTeX.com \begin{align*}\nabla_\theta \mathcal{J}(\theta) &= \sum_{s \in \mathcal{S}} \mu(s) \sum_{a \in \mathcal{A}} \nabla_\theta \pi_\theta(a,s) q_\pi(s, a) \\&= \sum_{s \in \mathcal{S}} \mu(s) \sum_{a \in \mathcal{A}} \pi_\theta(a,s) \nabla_\theta \ln \pi_\theta(a,s) q_\pi(s, a) && \text{Using (1)} \\&= \mathbb{E}_{\pi_\theta} [\nabla_\theta \ln \pi_\theta(a,s) q_\pi(s, a)] \end{align*}](https://akashe.io/wp-content/ql-cache/quicklatex.com-f95a4836a68154397b8898e4460cbe12_l3.png)

**Note**: The policy gradient theorem also works for episodic cases.

Compatible Function Approximation Theorem

If you notice, the gradients contain the term $q_\pi(s,a)$, and its true value is hard to come by, so it’s easier to use a function approximator $q_w(s,a)$ to estimate it. But the question is: wouldn’t that introduce bias in the estimation of the gradients? As it turns out, if you play your cards right, you can approximate the true gradients using $q_w(s,a)$.

So, the Compatible Function Approximation Theorem has these two conditions:

1) gradients of $q_w(s,a)$ = gradients of $\ln \pi_\theta(s,a)$

(3) $\nabla_w q_w(s,a) = \nabla_\theta \ln \pi_\theta(s,a)$

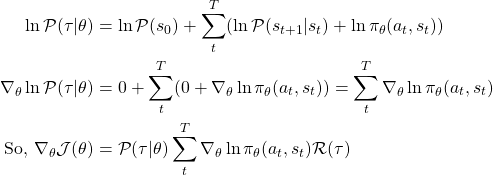

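At first this condition seems bizarre: how can the gradients be the same? But it depends on the choice of the policy and the feature vectors used for value approximation. For example, if you choose a softmax policy after a linear transformation of feature vectors $\phi(s,a)$ for discrete actions, then the gradients of $\ln \pi_\theta(s,a)$ come out as:

$$\nabla_\theta \ln \pi_\theta(s,a) = \phi(s,a) - \sum_{b \in \mathcal{A}} \pi_\theta(s,b) \phi(s,b)$$

Now if we choose our function approximator as:

$$q_w(s,a) = w^\top \Big[ \phi(s,a) - \sum_{b \in \mathcal{A}} \pi_\theta(s,b) \phi(s,b) \Big]$$

Then, $\nabla_w q_w(s,a)$ = $\nabla_\theta \ln \pi_\theta(s,a)$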

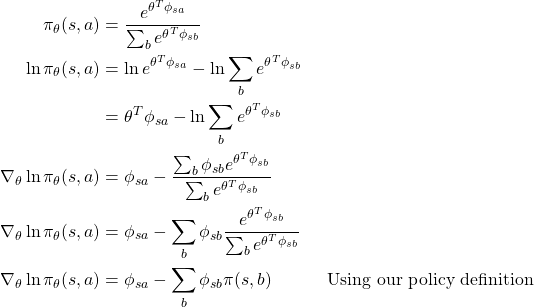

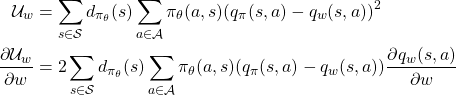

2) We are minimizing the least-squares error between the true value function and our function approximation.

We can get these true value function values using different policy evaluation methods. But let’s see how this condition enables estimating true gradient values with function approximators that follow condition 1. Since we are minimizing the mean squared error in the estimation of all possible $q_\pi(s,a)$ values, using a continuous environment we get

$$\epsilon = \sum_{s \in \mathcal{S}} d_{\pi_\theta}(s) \sum_{a \in \mathcal{A}} \pi_\theta(a,s) (q_\pi(s,a) - q_w(s,a))^2$$

When this error reaches a local minimum, its gradient with respect to $w$ is zero:

![Rendered by QuickLaTeX.com \begin{align*} 0 &= 2 \sum_{s \in \mathcal{S}} d_{\pi_\theta}(s) \sum_{a \in \mathcal{A}} \pi_\theta(a,s) (q_\pi(s,a) - q_w(s,a))\frac{\partial q_w(s,a)}{\partial w} \\0 &= \sum_{s \in \mathcal{S}} d_{\pi_\theta}(s) \sum_{a \in \mathcal{A}} \pi_\theta(a,s) (q_\pi(s,a) - q_w(s,a))\frac{\partial \ln \pi_\theta(s,a)}{\partial \theta} && \text{Using (3)} \\0 &= \sum_{s \in \mathcal{S}} d_{\pi_\theta}(s) \sum_{a \in \mathcal{A}} \pi_\theta(s,a) [\nabla_\theta \ln \pi_\theta(s,a) (q_\pi(s,a) - q_w(s,a)) ]\\0 &=\mathbb{E}_{\pi_\theta} [\nabla_\theta \ln \pi_\theta(s,a) (q_\pi(s,a) - q_w(s,a)) ] \\\mathbb{E}_{\pi_\theta} [\nabla_\theta \ln \pi_\theta(s,a) q_\pi(s,a)] &= \mathbb{E}_{\pi_\theta} [\nabla_\theta \ln \pi_\theta(s,a) q_w(s,a)]\end{align*}](https://akashe.io/wp-content/ql-cache/quicklatex.com-9b0065fd7f8825885d9d800c2c9ffc86_l3.png)

We can see now that if both conditions are met, then $q_w(s,a)$ gives a really good estimate of the true policy gradients of $\mathcal{J}(\theta)$.
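Both conditions can be checked numerically in the softmax case. The sketch below is a minimal single-state example under stated assumptions: the feature vectors, `theta` and the "true" action values `q_true` are invented, and `fit_compatible_weights` is a hypothetical helper that solves the two-parameter weighted least-squares problem in closed form. It first compares the analytic score with a finite-difference gradient of $\ln \pi_\theta$ (condition 1), then fits $w$ by least squares (condition 2) and compares the two policy gradients.

```python
import math


def softmax_policy(theta, features):
    """pi(a) proportional to exp(theta . phi(a)); one phi per action."""
    prefs = [sum(t * f for t, f in zip(theta, phi)) for phi in features]
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]


def score(theta, features, action):
    """grad_theta ln pi(a) = phi(a) - sum_b pi(b) phi(b)."""
    pi = softmax_policy(theta, features)
    n = len(theta)
    mean_phi = [sum(pi[b] * features[b][i] for b in range(len(features)))
                for i in range(n)]
    return [features[action][i] - mean_phi[i] for i in range(n)]


def q_w(w, theta, features, action):
    """Compatible approximator: linear in the score, so grad_w q_w is the score."""
    return sum(wi * si for wi, si in zip(w, score(theta, features, action)))


def fit_compatible_weights(theta, features, q_true):
    """Solve the normal equations A w = b of the pi-weighted least squares (2 params)."""
    pi = softmax_policy(theta, features)
    acts = range(len(features))
    s = [score(theta, features, a) for a in acts]
    A = [[sum(pi[a] * s[a][i] * s[a][j] for a in acts) for j in range(2)]
         for i in range(2)]
    b = [sum(pi[a] * s[a][i] * q_true[a] for a in acts) for i in range(2)]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return [(b[0] * A[1][1] - A[0][1] * b[1]) / det,
            (A[0][0] * b[1] - A[1][0] * b[0]) / det]


# Assumed toy values: one state, three actions, two features per action.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
theta = [0.2, -0.4]
q_true = [1.0, 5.0, -2.0]

# Condition 1: analytic score versus finite differences of ln pi.
action, eps = 2, 1e-6
fd_grad = []
for i in range(2):
    hi, lo = list(theta), list(theta)
    hi[i] += eps
    lo[i] -= eps
    fd_grad.append((math.log(softmax_policy(hi, features)[action])
                    - math.log(softmax_policy(lo, features)[action])) / (2 * eps))
analytic = score(theta, features, action)

# Condition 2: fit w, then compare the gradient built from q_true and from q_w.
w = fit_compatible_weights(theta, features, q_true)
pi = softmax_policy(theta, features)
grad_true = [sum(pi[a] * score(theta, features, a)[i] * q_true[a]
                 for a in range(3)) for i in range(2)]
grad_approx = [sum(pi[a] * score(theta, features, a)[i] * q_w(w, theta, features, a)
                   for a in range(3)) for i in range(2)]
print(grad_true, grad_approx)
```

Note that `q_w` itself does not have to match `q_true` action by action; it is only the resulting policy gradient that coincides.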

Advantage Function

Till now we talked about reducing bias when using function approximators, but what about variance in our gradients? Let’s say the rewards or Q-values in our environment vary from 0 to 10000. This results in a huge variance in the gradients. A natural approach is to use a concept called baselines. A baseline is a function that doesn’t depend on the action taken, only on the state. A natural choice for the baseline is $V_\pi(s)$. Adding or subtracting a baseline doesn’t change the expectation of our gradients.

![Rendered by QuickLaTeX.com \begin{align*} \mathbb{E}_{\pi_\theta} [\nabla_\theta \ln \pi_\theta(s,a) V_\pi(s)] &= \sum_{s \in \mathcal{S}} d_{\pi_\theta}(s) \sum_{a \in \mathcal{A}} \nabla_\theta \pi_\theta(a,s) V_\pi(s) \\&= \sum_{s \in \mathcal{S}} d_{\pi_\theta}(s)V_\pi(s) \sum_{a \in \mathcal{A}} \nabla_\theta \pi_\theta(a,s) \\&= \sum_{s \in \mathcal{S}} d_{\pi_\theta}(s)V_\pi(s) \nabla_\theta \sum_{a \in \mathcal{A}} \pi_\theta(a,s) \\&= 0\end{align*}](https://akashe.io/wp-content/ql-cache/quicklatex.com-535b6a10d4c3bfb9afe77660ce7524fd_l3.png)
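The same cancellation can be seen numerically. The sketch below assumes a state-independent softmax policy over three actions and an arbitrary constant `baseline`; because the action probabilities always sum to 1, their gradients sum to zero, so the baseline term vanishes whatever its value.

```python
import math


def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]


def grad_pi(theta, a):
    """Gradient of pi(a) w.r.t. theta for a softmax: pi(a) * (one_hot(a) - pi)."""
    pi = softmax(theta)
    return [pi[a] * ((1.0 if i == a else 0.0) - pi[i]) for i in range(len(theta))]


theta = [0.5, -1.0, 2.0]   # assumed policy parameters
baseline = 1234.5          # any value that does not depend on the action

# sum_a grad pi(a) * baseline, accumulated component by component
total = [0.0] * len(theta)
for a in range(len(theta)):
    g = grad_pi(theta, a)
    total = [t + baseline * gi for t, gi in zip(total, g)]

print(total)
```

Every component of `total` is zero up to floating-point rounding, even with a large baseline value.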

So our advantage function looks like $A_\pi(s,a) = q_\pi(s,a) - V_\pi(s)$

In practice we estimate the advantage function as $A(s,a) = q_w(s,a) - V_v(s)$, using two separate approximators and updating them using function approximation methods.
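One way to picture the two-approximator setup is the sketch below. It is only an illustration under assumptions: both approximators are linear, they are trained by simple regression toward observed returns, and the class `LinearApproximator`, the one-state problem and the returns (1 for action 0, 3 for action 1, visited equally often) are all hypothetical.

```python
def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


class LinearApproximator:
    """Linear function approximator trained by a gradient step toward a target."""

    def __init__(self, n_features, lr=0.01):
        self.weights = [0.0] * n_features
        self.lr = lr

    def predict(self, features):
        return dot(self.weights, features)

    def update(self, features, target):
        error = target - self.predict(features)
        self.weights = [w + self.lr * error * f
                        for w, f in zip(self.weights, features)]


def advantage(q_approx, v_approx, state_action_features, state_features):
    """A(s,a) estimated as q_w(s,a) - V_v(s) from two separate approximators."""
    return q_approx.predict(state_action_features) - v_approx.predict(state_features)


def one_hot(index, size):
    return [1.0 if i == index else 0.0 for i in range(size)]


# Assumed toy data: a single state, two actions tried equally often.
q_approx = LinearApproximator(n_features=2)
v_approx = LinearApproximator(n_features=1)
returns = {0: 1.0, 1: 3.0}

for _ in range(1000):
    for action in (0, 1):
        target = returns[action]
        q_approx.update(one_hot(action, 2), target)  # learns a value per action
        v_approx.update([1.0], target)               # learns the average over actions

adv_0 = advantage(q_approx, v_approx, one_hot(0, 2), [1.0])
adv_1 = advantage(q_approx, v_approx, one_hot(1, 2), [1.0])
print(adv_0, adv_1)
```

The state-value estimate settles near the average return of 2, so the advantages come out close to -1 and +1: the better action gets a positive signal and the worse one a negative signal, regardless of the overall reward scale.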